Breaking the habit of talking about Unknown-Unknowns like you know what it means. Part 2.

The Time-Bound Nature of Threats

If you landed here without reading Part 1 of this article, I recommend you head there and give it a quick read ;)

The quick backstory

We have inherited the old "Rumsfeld" knowledge matrix, which is straightforward but lacks depth in capturing the different dimensions of knowledge and the role of time. We suggest that the confusion lies in the epistemological understanding of knowledge, and we propose a more nuanced approach involving time and awareness. This article argues for considering the role of time in defining knowns, unknowns, and the predictability of events. It presents a revised knowledge matrix that incorporates the dimensions of past, present, and future knowledge to better understand the complexities of threat detection.

Blind Threat Hunting?

Let’s continue where we left off. The cyber security industry largely regards Threat Hunting as the art of seeking threats that we don’t know we don’t know (unknown unknowns). An example would be the activity of a threat actor who has dwelled in your network for months, exfiltrating data and spreading backdoors, unnoticed by your cyber team and evading the vast majority of your security controls. Common lore holds that this is one of the most difficult and complex tasks a security team can undertake.

Following the knowledge matrix explained in Part 1, we can’t rely on:

standard detection rules based on known attacker techniques, the domain of known knowns.

IOCs (indicators of compromise), IOAs (indicators of attack), and other derivative indicators that result from novel research into zero-days and threat actor TTPs in general, which usually require a higher degree of expertise to implement, the domain of known unknowns.

So what kinds of activities would threat hunting involve if it avoided known detection rules, IOCs, IOAs and, more generally, any form of structured evidence of system intrusion? Let’s not forget: we are not supposed to know that we don't know these things (unknown unknowns). It’s like looking for unseen (unknown) unseen (unknown) objects. Hypothetically, such a process might go like this:

Leveraging available datasets, threat hunters would craft queries that explore the data heuristically: statistical analysis, stacking methods, and unsupervised machine learning algorithms (K-Means clustering, hierarchical clustering, certain neural network architectures such as autoencoders, etc.). Let’s remember: in this hypothetical world of “unknown unknowns”, we don’t know what we are looking for, so we can only rely on data visualization and manipulation techniques in the hope that they will surface suspicious patterns. In other words: we will know it when we see it, not before. Hold on, I know you are already thinking about the consequences of this statement; let’s keep pulling on this thread.
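Of the techniques listed above, stacking (often called least-frequency-of-occurrence analysis) is the simplest to illustrate. The sketch below is a minimal example under our own assumptions: the events are hypothetical (host, process name) pairs, and the function name `stack_rare` and its `max_hosts` threshold are illustrative, not part of any particular tool. It counts how many distinct hosts each value appears on and surfaces the rarest ones.

```python
from collections import defaultdict


def stack_rare(events, max_hosts=1):
    """Least-frequency stacking over (host, value) pairs.

    Counts the distinct hosts each value appears on and returns the values
    seen on at most `max_hosts` hosts, rarest first. The field choice and
    the threshold are illustrative assumptions, not a recommended setting.
    """
    hosts_per_value = defaultdict(set)
    for host, value in events:
        hosts_per_value[value].add(host)
    rare = [
        (value, len(hosts))
        for value, hosts in hosts_per_value.items()
        if len(hosts) <= max_hosts
    ]
    # Sort by host count, then by value, for a stable, readable ordering.
    return sorted(rare, key=lambda item: (item[1], item[0]))


if __name__ == "__main__":
    # Hypothetical process-creation telemetry: (host, process name).
    sample = [
        ("ws-01", "explorer.exe"), ("ws-02", "explorer.exe"), ("ws-03", "explorer.exe"),
        ("ws-01", "chrome.exe"), ("ws-02", "chrome.exe"),
        ("ws-03", "updater_x.exe"),
    ]
    for value, count in stack_rare(sample):
        print(f"{value}: seen on {count} host(s)")
```

Note that the sketch only ranks values by rarity; it says nothing about whether a rare value is malicious. That judgement still rests with the analyst, which is precisely the "we will know it when we see it" problem.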

Let’s say that, as a result of our efforts over some period of time n, some suspicious patterns are revealed. How we determined these were “suspicious”, as opposed to “normal”, is not something we will explore in this article¹. Let’s just assume we did find “suspicious” events that are indicative of threat actor activity.

A deeper investigation into the events uncovered via threat hunting would eventually reveal the presence of a threat actor, and DFIR would unravel the system of backdoors and past activity performed by the intruder.

Revisiting the narrative

This tale sounds nice, right? Well, let’s now deconstruct this narrative with a few observations:

In the real world, how would you ever justify such a programme to the decision-makers who set budgets for such tasks? We are basically asking them to trust an open-ended process with a loosely defined direction, based on playing with data in various ways in the hope that we will strike gold and find “suspicious stuff”.

The hypothetical approach described above completely dismisses the collective knowledge amassed and structured by the cyber community. Complex, ordered cyber threat knowledge like MITRE ATT&CK would go unused, since we are only looking for “unknown unknowns”. By definition, no amount of previously organized knowledge can help you anticipate these types of eventualities. This approach discards deep, highly structured, crowd-sourced, community-validated insight into attacker tactics, techniques, and procedures (TTPs), insight that organizes past knowledge into useful semantic categories. In other words, the search for unknown unknowns rejects any attempt to leverage structured knowledge, because such knowledge would constrain the open-ended nature of the approach.

Since open-ended data exploration in the search for unknown unknowns relies almost entirely on the ability of algorithms to reveal underlying patterns, and on the ability of the human analyst to distinguish the anomalous from the normal, how can the threat hunting process become iterable? How can the combined effect of machine-assisted analysis and human experience be made repeatable and transferable to other analysts? In other words, how can we avoid building castles in the air?

Given the above points, how would you measure progress or stagnation, advancement or retreat? How would ROI be calculated under these circumstances?

As you can see, when we try to stay true to the concept of unknown unknowns, we may set unrealistic expectations and end up with unwanted consequences that are either inconsistent with the goals of threat hunting or make no sense in real-world scenarios.

Epistemic Confusion

The confusion here is epistemological. It stems from the repeated, industry-driven, and unquestioned use of the knowledge matrix popularized in the military world, which has permeated the cyber industry almost automatically.

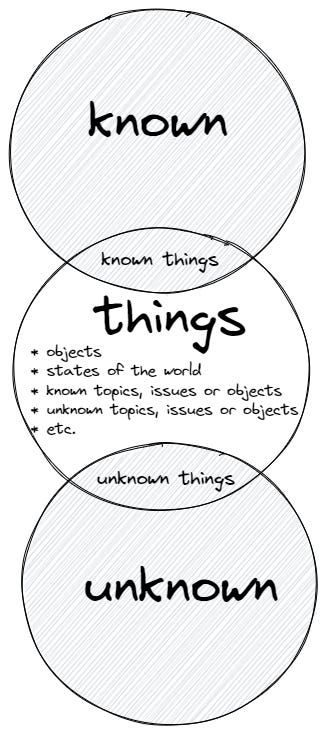

To solve this epistemological confusion, we must understand a very important dimension of knowledge: knowledge is bounded and permeated by time. The act of knowing is a state of tension in which the knower perceives an object as different from itself. This object is posited in a differential relationship that allows “it” to be “known” by “someone”. So instead of talking about “knowns” or “unknowns”, we should talk about things that are already known or so far unknown (not yet known). The different aspects of these things are revealed progressively, given enough time and our ability to sense emergent relationships. If we had to depict our knowledge matrix, it would be similar to this:

However, as stated above, we can’t talk about knowledge without considering the dimension of time. Time brings to the table many properties of knowledge that would otherwise have no place: eventuality (occurrences that may or may not be anticipated), emergence (occurrences that result from the complex interaction of elements, whether known or unknown), awareness (we can’t be aware of all variables at the same time; our awareness window is limited), bounded applicability (knowledge is limited in its applicability to a particular domain, but that applicability can change over time), etc.

Let’s imagine what our knowledge matrix would look like now:

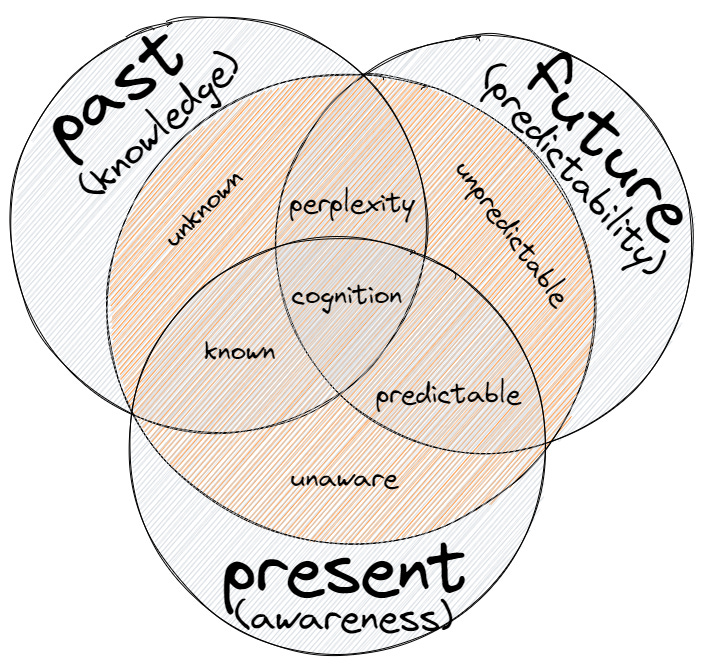

When we add time to the mix, we can reinterpret the classic Rumsfeld knowledge matrix and talk about the different dimensions of time as they relate to the way we describe our knowledge:

PAST. The descriptive dimension of things we already know or used to know. Known or unknown events: this amounts to describing our past state of knowledge about something, as in “back then, we were unaware that we didn’t know about this or that”.

PRESENT. The descriptive dimension of the knower, who is always situated in the present moment. Aware or unaware events: what is your current state of awareness in relation to the actual variables required to generate accurate forecasts? What is your current state of awareness about your past knowledge?

FUTURE. The descriptive dimension of anticipation, our way of describing things in terms of likelihood, probability, and possible scenarios. Predictable or unpredictable events: what scenarios can you predict based on past knowledge and present awareness?

A more complete version of the knowledge model would then look like this:

In light of this new representation of our knowledge matrix, a few statements can be made:

The red circle in our diagram represents the "objective" world of things, but only in relation to the observer and its awareness window. There is no point talking about an "objective" world outside the meaning conferred on that term by the subject who perceives "things". Whether or not there is an absolute objective world out there is irrelevant to this model.

Only things we are aware of, within our "window of awareness", can be considered "known" or "predictable". Anything else is unknown or unpredictable, depending on whether we are describing those things from the point of view of the past or the future.

Perplexity is a state of confusion that arises when elements of the past and the future collide without being distilled through the process of cognition, so they cannot be categorized as "known" or "predictable" artifacts.
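To make the statements above concrete, here is a toy encoding of the revised matrix. It is our own illustrative reading rather than a formal model: the names `Perspective` and `classify` are hypothetical, the present dimension is reduced to a single `within_awareness` flag (the knower's awareness, always evaluated now), and perplexity is deliberately left out because, by definition, it resists categorization.

```python
from enum import Enum


class Perspective(Enum):
    PAST = "past"      # descriptive: what we know, or used to know
    FUTURE = "future"  # anticipatory: what we can or cannot predict


def classify(perspective: Perspective, within_awareness: bool) -> str:
    """Label a thing according to an illustrative reading of the revised matrix.

    The present is represented only by `within_awareness`: whether the thing
    falls inside the knower's window of awareness right now. Only things
    inside that window can be "known" (looking back) or "predictable"
    (looking forward).
    """
    if perspective is Perspective.PAST:
        return "known" if within_awareness else "unknown"
    return "predictable" if within_awareness else "unpredictable"


if __name__ == "__main__":
    # Print all four combinations of perspective and awareness.
    for perspective in Perspective:
        for aware in (True, False):
            print(f"{perspective.value:>6} | aware={aware!s:<5} | {classify(perspective, aware)}")
```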

Looking at things through this new lens, when the old "Rumsfeld" model talks about “known unknowns” or “unknown unknowns”, it is basically talking about our state of awareness in relation to things in the world.

From this perspective, “unknown unknowns” actually means things that are not predictable because we are not aware of them. Consequently, only things we are aware of can be predictable, with some degree of certainty. This awareness is affected by circumstances and time. It is our relationship with the systems we interact with (be they computer systems, social systems, environmental systems, etc.) that reveals what is considered known or unknown.

These interactions are always constrained by systemic conditions that create patterns in complex ways. The existence of these patterns is what makes collective efforts like MITRE ATT&CK possible: repetition and anticipation of human behaviour constrained by available hacking tools and vulnerable systems, encoded in the form of tactics, techniques, and procedures.
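One practical consequence of these patterns being encoded is that a hunt can be written down as a set of explicit, technique-scoped hypotheses, which is what makes it repeatable and transferable between analysts. The sketch below assumes a hypothetical event format (plain dictionaries with `parent`, `process` and `registry_key` fields) and deliberately naive predicates; only the technique IDs and names come from MITRE ATT&CK. It illustrates the idea and is not a usable detection rule.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Event = Dict[str, str]  # hypothetical flat event record


@dataclass(frozen=True)
class Hypothesis:
    technique_id: str                 # MITRE ATT&CK technique ID
    technique_name: str               # MITRE ATT&CK technique name
    matches: Callable[[Event], bool]  # naive, illustrative predicate


HYPOTHESES: List[Hypothesis] = [
    Hypothesis(
        "T1059.001",
        "Command and Scripting Interpreter: PowerShell",
        # Illustrative: PowerShell launched by a Word process.
        lambda e: e.get("parent") == "winword.exe" and e.get("process") == "powershell.exe",
    ),
    Hypothesis(
        "T1547.001",
        "Boot or Logon Autostart Execution: Registry Run Keys / Startup Folder",
        # Illustrative: any event whose registry key path contains CurrentVersion\Run.
        lambda e: "\\CurrentVersion\\Run" in e.get("registry_key", ""),
    ),
]


def run_hunt(events: List[Event]) -> Dict[str, int]:
    """Count how many events match each technique-scoped hypothesis."""
    hits = {h.technique_id: 0 for h in HYPOTHESES}
    for event in events:
        for h in HYPOTHESES:
            if h.matches(event):
                hits[h.technique_id] += 1
    return hits


if __name__ == "__main__":
    sample = [
        {"parent": "winword.exe", "process": "powershell.exe"},
        {"registry_key": r"HKCU\Software\Microsoft\Windows\CurrentVersion\Run"},
        {"parent": "explorer.exe", "process": "notepad.exe"},
    ]
    for technique_id, count in run_hunt(sample).items():
        print(technique_id, count)
```

Because each hypothesis is tied to a technique ID, hit counts can be tracked per technique from one hunt to the next, which offers at least a rough basis for the progress and coverage questions raised earlier.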