I was once a SOC Analyst

At the beginning of my career in cybersecurity, I worked as a SOC analyst. This was an era when EDRs were not even a concept. Everything was just plain SIEM and raw logs, and vendors created most detection content. Detection Engineering was not even a thing.

I remember the days of the endless alert queue, the countless false positives, the feeling of enthusiasm and the dizziness of overwhelming data streams.

I especially remember the many times I thought I had just found a surreptitious cyber attack. I would spend hours scouring the internet for explanations for the data I was observing, correlating logs, talking to colleagues. Confirmation bias was tremendous. The deep excitement was palpable. The feeling that I had just found a reverse shell, a broad malware infection or hands-on-keyboard attacker activity.

I remember all the investigative work I did only to find out, with tenuous disappointment, that 95% of the time the source of observed malicious activity had a simple explanation: the client’s vulnerability scanner or a pentester.

Those were fun days. I looked at every sentence, word and character of a syslog entry or Windows Event Log with deep curiosity. I wanted to understand it all. My growth curve was off the charts.

But I also have very vivid memories of the toil, the grind, the never-ending false positives. The feeling that I wasn’t contributing anything proactive to better protect the customers.

Security Analyst work can sometimes feel like being at the bottom of a cascade of alerts, trying to catch a speck of gold with your hands.

Turnover and burnout are high. Most of all at entry and mid-levels.

I’m sure many security analysts working at countless SOCs around the world can relate.

Over time though, I started to realise two things:

Alerts are information. Specifically, information about your systems.

Most security analysts out there will perform several investigative steps to triage and analyse an alert, usually involving OSINT and other forms of threat intelligence.

And before the AI evangelists raise AI as the solution to the problems above, let me say “yes”: you can use automation and AI to enrich your alerts with intel and other information. So what? Suddenly, human triage and analysis are no longer needed? If that’s the case, why would you still need alerts?

Alerts are human attention-sucking artefacts that are there for the purpose of actioning something.

If human validation is not required as an intermediary between the trigger (alert) and the action (a change to the system), then you have 100% automation right there. No need for alerts. They would simply add needless friction.

As much as a lot of people would love to believe it, as of 2025, we are not there yet.

Try to explain to your customers and clients that their precious personal data, money or resources are 100% protected by automated bots and AI agents, with no human ever validating, triaging, or investigating anything.

The gurus of the automated-block-chain-quantum-powered-AI-driven world forget that humans are also an interface. You need to talk to our messy, biased and conflicting biological APIs too. We are still far away from the automaton CEO.

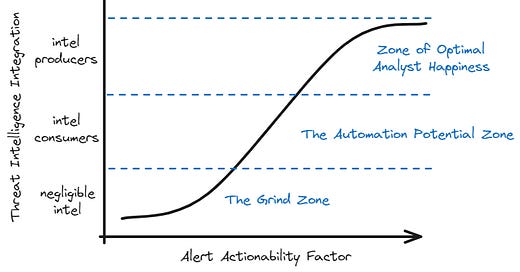

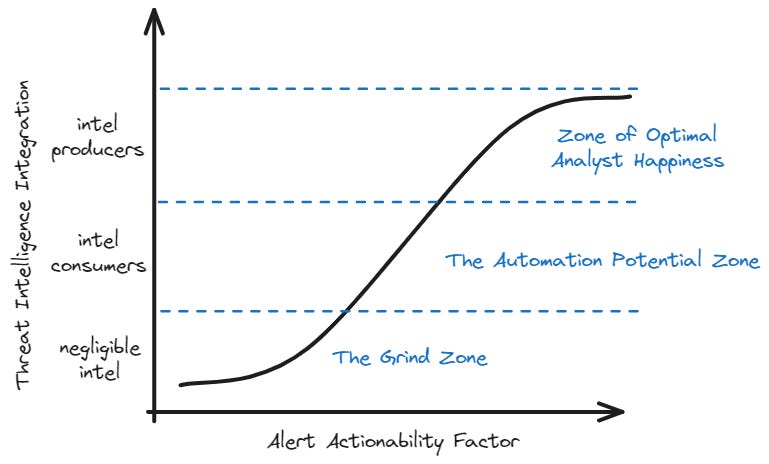

Alerts and security analysts are here to stay. But how can we reduce burnout, curb turnover and extract high-value information from our SOC?

Could we transform your SOC into an intel provider? Could your analysts perform more complex, higher-value data analysis? Could we proactively inject this information back into our security controls?

The Slap

Some time ago, I had a realisation: an alert is essentially internal threat intel.

This led me to the next, more profound realisation: any refined information about the risks to your systems and data is intel.

Wait a second bro, are you saying that the kinds of alerts you create by engineering detections are intel? Yes, I'm talking about the types of notables, warnings, observables or alerts that an EDR or SIEM would raise.

If we boil it down to the essentials, an alert is:

A claim about the state of events in a system

The claim can be true or false but is an interpretation of the system's state at a point in time. I will provide a basic example first, a plain and isolated alert: “Suspicious network activity detected: Multiple failed login attempts from an unusual IP address on a server hosting customer financial data”.[1]

A state of events that indicates a departure from the expected or desired state of the system

In other words: a risk. A state of affairs in the system capable of introducing disruptions that may impact the regular behaviour of the system. Following up on our example above, while failed login attempts can happen (users mistyping passwords), was there in the end a successful login attempt? A successful login attempt after multiple failures from an unusual IP address is a strong indicator of unauthorized access. This situation introduces a risk vector.

Information that normally requires validation, triage and analysis

So that it can inform some action. We raise alerts because they should prompt some sort of action. If human attention wasn't required, it wouldn't be called an alert, it would simply be a fully automated logic chain.

Information that is normally enriched with added contextual information

To build a situational awareness graph: who, what, when, where, why.
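To make the four properties above a bit more tangible, here is a minimal sketch of an alert modelled as a claim. The `Alert` class, its field names and the sample values (`finance-db-01`, the timestamp) are all assumptions of mine for illustration, not the schema of any SIEM or EDR.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Alert:
    """An alert modelled as a claim about system state (hypothetical schema)."""
    claim: str                       # the statement about the system's state
    observed_at: datetime            # the point in time the claim refers to
    expected_state: str              # the state the system departed from
    verdict: Optional[bool] = None   # None until a human has triaged the claim
    context: dict = field(default_factory=dict)  # who, what, when, where, why

    def needs_human_attention(self) -> bool:
        # An untriaged claim still needs validation before it can inform action.
        return self.verdict is None

    def enrich(self, **facts: str) -> None:
        # Add contextual facts that build up situational awareness.
        self.context.update(facts)


# Illustrative values only; the hostname and timestamp are made up.
alert = Alert(
    claim="Multiple failed logins from an unusual IP on a server "
          "hosting customer financial data",
    observed_at=datetime(2025, 1, 15, 9, 30, tzinfo=timezone.utc),
    expected_state="Logins come from known networks and mostly succeed",
)
alert.enrich(who="unknown", where="finance-db-01", what="failed logins")
```

The `verdict` field starts empty on purpose: the claim can turn out true or false, and it is the analyst who settles it.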

Threat Intelligence is:

A claim about the state of events in a system

The "system" happens to be the wider internet with all its nebulous digital metaverse and points of contact with our internal and external networks. In intel terms, we call it the "threat landscape". The claims made about this landscape can apply or not to your environment, and they can be true or false (not all intel is equally accurate) about the state of affairs it describes. It represents, however, an interpretation of observed data. Example of what you could find in a threat report: Recent observations indicate a surge in brute-force login attempts targeting financial institutions globally. Attackers are leveraging automated tools and botnets to systematically guess user credentials and gain unauthorized access to sensitive systems. This activity aligns with the broader trend of financially motivated cybercrime, with attackers seeking to exploit vulnerabilities for financial gain.

A state of events that indicates a departure from the expected or desired state of the system

Information about potential or realised violations to the confidentiality, availability or integrity of data, perpetrated by threat actors, that indicates a significant enough departure from a desired state of the world conducive to peaceful business. Something signals danger and hits the business risk thresholds: "too close to home".

Information that normally requires validation, triage and analysis

So that it can inform some action. If human brain attention wasn't required, it wouldn't be called threat intelligence. It is we, hominids, that experience this perceptual construct called a "threat".

Information that is normally enriched with added contextual information

Threat intel is a narrative knowledge graph that is more or less enriched and informs situational awareness.

I'm sure there are many flaws in my analogy. But beyond the commonalities and differences you may also find, the point of this realisation, which slapped me in the face like a rogue frisbee on a windy day, is that intel is refined information about a state of affairs (be it systems, people or data) with the purpose of prompting actions that reduce the risk to your business operations.

Intel should slap you in the face.

(gently of course, unless you are doing everything wrong).

If it doesn’t slap you in the face, then it won’t prompt any actions from you.

But what if you don’t need to merely look outside to gather intel? What if you could mine intel internally and look at things from a different angle?

Your SOC as an Intel Provider?

As mentioned at the beginning, what are the two main detrimental factors all SOCs struggle with? → Burnout and Boredom.

SOCs out there suffer from high turnover and burnout rates. Good and ambitious analysts will yearn for more and want to be part of more complex initiatives, solving bigger problems.

What distinguishes an average analyst or engineer from one with potential for senior or lead roles is not just deepening technical skills but something even rarer: their ability to look at problems from one level up.

Aspiring senior engineers and analysts are the ones who apply themselves to identifying the patterns in problems. They don’t just focus on the task at hand. Excelling at the task at hand will only make you better at doing more of those tasks.

Engineers who identify patterns realise that there is always a higher-level order in which the problem exists. Like a one-dimensional line that is part of a two-dimensional rectangle or three-dimensional cube.

Over the long term, being good at simple lines won’t make you good at rectangles. In the same way, focusing solely on being the best rectangle drawer won’t get you to cubes.

If alerts engineered by a detection team and triaged by a security analyst can be considered internal threat intelligence, shouldn’t your SOC become an intel provider?

There are many ways in which we can do this:

Tag your data. Most SOCs already do this by default.

Map MITRE and OSINT metadata to Threat Actor profiles.

Develop data analysis reports.

Feed intel back into the system as threat-hunting or detection initiatives.

Tag your data

By tagging your alert data, you generate metadata that can later be used either to perform high-level trend analysis or to train machine learning algorithms that predict whether a particular alert deserves a little, an average amount or a lot of attention.

Some of these tags may also come pre-applied via detection engineering or automation.

Usual tags used by SOCs out there for their alerts:

Severity/Priority (High, Medium, Low, Critical, Informational)

Threat/Attack Type (Brute-force, Phishing, Malware, DDoS, SQLi, XSS, Insider Threat, Data Exfiltration; use a more granular and comprehensive taxonomy based on your specific needs and common threats. MITRE ATT&CK can help inform this.)

MITRE ATT&CK Framework (Tactic: TA0006 (Credential Access), TA0003 (Persistence), TA0011 (Command and Control); Technique: T1110 (Brute Force), T1547.001 (Boot or Logon Autostart Execution: Registry Run Keys / Startup Folder), etc.)

Confidence Level (Confirmed, Suspected, High Confidence, Low Confidence)

False Positive/Benign (False Positive, Benign, Expected Activity, etc.)

Asset/System (DMZ Server, Web Application, Endpoint, Database Server, VPN; tag the specific asset or system affected.)

User (Admin, Employee, Contractor, System Account)

Data Source (Firewall, IDS/IPS, EDR, SIEM, Log File)

Status/Action Taken (Blocked, Quarantined, Investigated, Remediated, Needs Review)

Evidence Collected (Registry, Files, etc.)
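Tags only pay off later if they are applied consistently, so here is a rough sketch of what a small controlled vocabulary and a first trend count could look like. The `ALLOWED_TAGS` dictionary, the key names and the sample alerts are assumptions for illustration; your ticketing system or SIEM will have its own fields.

```python
from collections import Counter

# Hypothetical controlled vocabulary, borrowing a few of the tag families above.
ALLOWED_TAGS = {
    "severity": {"Critical", "High", "Medium", "Low", "Informational"},
    "confidence": {"Confirmed", "Suspected", "High Confidence", "Low Confidence"},
    "status": {"Blocked", "Quarantined", "Investigated", "Remediated", "Needs Review"},
}


def validate_tags(tags: dict) -> list:
    """Return a list of problems found in an alert's tags (empty if none)."""
    problems = []
    for key, allowed in ALLOWED_TAGS.items():
        value = tags.get(key)
        if value is None:
            problems.append(f"missing tag: {key}")
        elif value not in allowed:
            problems.append(f"unknown {key}: {value}")
    return problems


def technique_trend(alerts: list) -> Counter:
    """Count how often each ATT&CK technique tag appears across alerts."""
    return Counter(a["technique"] for a in alerts if "technique" in a)


# Made-up sample data: the third alert is deliberately badly tagged.
alerts = [
    {"severity": "High", "confidence": "Suspected",
     "status": "Investigated", "technique": "T1110"},
    {"severity": "Low", "confidence": "Confirmed",
     "status": "Blocked", "technique": "T1110"},
    {"severity": "Urgent", "confidence": "Suspected",
     "technique": "T1547.001"},
]

for a in alerts:
    print(validate_tags(a))
print(technique_trend(alerts).most_common())
```

Rejecting free-text values like `Urgent` at tagging time is what keeps the later trend analysis (or any model training) from drowning in near-duplicate labels.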

Map MITRE and OSINT metadata to Threat Actor profiles

Your first alert triage will normally reduce the volume of alerts that deserve attention by filtering out initial noise.

The remaining alerts, the ones deserving further investigation, should be accompanied by extensive OSINT investigations.

Perhaps a suspicious logon attempt from an unusual public IP to a high-risk service that is part of the infrastructure of your crown jewels ends up being a false positive, but what if you follow the trace of that IP?

What ASN does it belong to?

Are there IPs in that ASN block that are or have been associated with malware callbacks or threat actors?

If you perform OSINT pivoting and find various samples of malware calling back to sibling IPs inside the same ASN, what do these samples have in common?

Are there any threat actors out there known to actively exploit the types of services (e.g. IIS, Apache HTTP Server, etc.) the alert is pointing to?
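The first two questions above can be sketched in a few lines. Everything in this example is hypothetical: the `PREFIX_TO_ASN` table and the `KNOWN_BAD_IPS` list stand in for whatever routing data and IOC store you actually have, and the addresses and AS numbers come from ranges reserved for documentation.

```python
import ipaddress

# Hypothetical prefix-to-ASN table. In practice this would come from your
# own enrichment source; these prefixes and ASNs are documentation-only values.
PREFIX_TO_ASN = {
    "192.0.2.0/24": 64496,
    "198.51.100.0/24": 64497,
}

# Hypothetical IPs previously recorded as malware callbacks.
KNOWN_BAD_IPS = ["192.0.2.77", "192.0.2.130", "198.51.100.9"]


def asn_for(ip: str):
    """Return the ASN whose prefix contains the IP, or None if unknown."""
    addr = ipaddress.ip_address(ip)
    for prefix, asn in PREFIX_TO_ASN.items():
        if addr in ipaddress.ip_network(prefix):
            return asn
    return None


def sibling_bad_ips(alert_ip: str) -> list:
    """List known-bad IPs that sit in the same ASN as the alert's IP."""
    asn = asn_for(alert_ip)
    if asn is None:
        return []
    return [ip for ip in KNOWN_BAD_IPS
            if ip != alert_ip and asn_for(ip) == asn]


print(sibling_bad_ips("192.0.2.10"))
```

Sharing an ASN is weak evidence on its own, which is exactly why this is a pivot to start an investigation from and not a verdict.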

Your investigation might return a false positive, but in order to arrive at that you had to well… perform an investigation. In doing so, you will find a lot of information that can be mined and used to further protect your systems.

Granted, the correlation factor is low. There is a huge element of serendipity in this approach.

But in researching information that helps you decide whether to escalate or discard an alert, you usually stumble upon useful information that can be operationalized.

An alert is an artefact that was created by a detection engineer somewhere along the chain (3rd party or in-house) and only made it to your data lake because someone decided it was significant enough to indicate a potential attack technique. This decision is made by act or omission, intentional or implied, but it’s a decision nonetheless.

If this attack technique is significant enough to be highlighted, why wouldn’t it be significant enough as a trigger that enables the investigation of which threat actors and which IOCs match those patterns over the last 6 months?

Threat Intelligence Teams out there don’t always have better criteria to choose one campaign or threat actor over another. Starting with the attack paths and techniques your organisation thinks are the most relevant is as good a start for OSINT as any (I will leave the need for threat modelling for another post).

We are mostly looking to mine relevant and usable IOCs here. They are perishable, yes, but useful too if consumed at the right time and disseminated to your blocking controls.

Grab the information your security analysts produce with sweat and tears and map it to available threat actors.

You are NOT doing attribution here, you are simply doing correlation. The mapping will show you which techniques you most often observe triggering and which threat actors they are associated with.
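As a rough illustration of correlation without attribution, the sketch below counts how much of your triggered technique volume overlaps with each actor's known techniques. The `TECHNIQUE_TO_ACTORS` mapping and the `ACTOR-A`/`ACTOR-B` names are placeholders I made up; in practice you would populate the mapping from your own intel sources.

```python
from collections import Counter

# Hypothetical mapping from ATT&CK technique IDs to actor profiles.
# The actor names are placeholders, not real groups.
TECHNIQUE_TO_ACTORS = {
    "T1110": ["ACTOR-A", "ACTOR-B"],
    "T1547.001": ["ACTOR-B"],
}


def correlate(triggered_techniques: list) -> Counter:
    """Count, per actor, how many triggered alerts used one of their techniques.

    This is overlap, not attribution: a high count only means the actor's
    known techniques resemble what fires in your environment.
    """
    actor_hits = Counter()
    for technique, count in Counter(triggered_techniques).items():
        for actor in TECHNIQUE_TO_ACTORS.get(technique, []):
            actor_hits[actor] += count
    return actor_hits


# Technique tags taken from a made-up batch of triaged alerts.
observed = ["T1110", "T1110", "T1547.001", "T1059"]
overlap = correlate(observed)
print(overlap.most_common())
```

Techniques with no mapped actor (`T1059` in the sample) simply drop out, which is itself worth reporting: it shows where your actor profiles have gaps.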

Even all the commodity attacks blocked at your perimeter like phishing emails with weaponized attachments can be a source of intel your SOC could utilise:

What are the most common types of malware blocked at the perimeter?

Who are the most frequently targeted users? Are there any patterns there?

If you detonate some of the samples in dynamic analysis environments, which domains and IPs are they calling back to?

Can you gather the list of callback IPs and domains and preemptively block them in case there are weaponised documents that can evade your perimeter controls?
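The questions above boil down to a few aggregations over detonation results. The record layout, the recipients, the family names and the callback values below are all invented for the sake of the sketch; real sandbox reports will look different and need parsing first.

```python
from collections import Counter

# Hypothetical, already-parsed sandbox results for blocked attachments.
detonations = [
    {"recipient": "alice@example.com", "family": "loader-x",
     "callbacks": ["evil.example", "192.0.2.10"]},
    {"recipient": "bob@example.com", "family": "loader-x",
     "callbacks": ["evil.example"]},
    {"recipient": "alice@example.com", "family": "stealer-y",
     "callbacks": ["203.0.113.7"]},
]


def build_blocklist(reports: list) -> list:
    """Deduplicate every callback domain or IP seen across detonations."""
    return sorted({cb for r in reports for cb in r["callbacks"]})


def most_targeted(reports: list, n: int = 3) -> list:
    """Return the n recipients who received the most blocked samples."""
    return Counter(r["recipient"] for r in reports).most_common(n)


def common_families(reports: list, n: int = 3) -> list:
    """Return the n malware families seen most often at the perimeter."""
    return Counter(r["family"] for r in reports).most_common(n)


print(build_blocklist(detonations))
print(most_targeted(detonations, 1))
print(common_families(detonations, 1))
```

Before pushing a list like this to blocking controls, someone still needs to check it for shared infrastructure and legitimate services, so treat the output as a candidate list.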

You can see where this is going… bring in the lens of threat intel to your SOC operations.

Develop data analysis reports

Give your analysts the chance to collate all the data gathered in the previous steps.

Challenge them to craft meaningful data analysis reports:

What’s the trend of TTPs whose alerts trigger in your environment? → you need to ask yourself why this happens

Which clusters of activity were investigated during the analysis of an alert?

What are the top 10 FPs that need to be urgently tuned? → this helps reduce the grind

How many IOCs did the SOC mine from the internet and apply to security controls? → demonstrates value add and proactivity

What is the MITRE mapping from TTPs → Alerts → Threat Actors? → this could shed some light on neglected areas

How many of your alerts are mapped to high-risk assets or crown jewels?

Allow your analysts to move one level up in strategic thinking: challenge them to analyse the high volumes of metadata collected during a sprint or a month and produce relevant metrics.
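Two of the report questions above (top false positives and crown-jewel coverage) are simple enough to sketch. The `closed_alerts` records, the rule names and the `crown_jewel` flag are assumptions for illustration; the real inputs would be an export from your case management tool.

```python
from collections import Counter

# Hypothetical export of closed alerts for one reporting period.
closed_alerts = [
    {"rule": "Failed logins burst", "verdict": "False Positive", "crown_jewel": True},
    {"rule": "Failed logins burst", "verdict": "False Positive", "crown_jewel": False},
    {"rule": "New autorun key", "verdict": "False Positive", "crown_jewel": False},
    {"rule": "Beaconing pattern", "verdict": "True Positive", "crown_jewel": True},
]


def top_false_positive_rules(alerts: list, n: int = 10) -> list:
    """Rank detection rules by how many false positives they produced."""
    fps = Counter(a["rule"] for a in alerts
                  if a["verdict"] == "False Positive")
    return fps.most_common(n)


def crown_jewel_share(alerts: list) -> float:
    """Fraction of alerts that touched a high-risk asset or crown jewel."""
    if not alerts:
        return 0.0
    return sum(a["crown_jewel"] for a in alerts) / len(alerts)


print(top_false_positive_rules(closed_alerts))
print(f"{crown_jewel_share(closed_alerts):.0%} of alerts hit crown jewels")
```

None of this is sophisticated, and that is the point: once the tags exist, the first useful metrics are a handful of counts away.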

Auf Wiedersehen

I know some of the ideas put forward here come with lots of caveats. I don’t intend to offer instantaneous solutions to all our problems.

I’m more about kicking off the conversation.

What do you think about this article?

I will enable a chat on Substack for all the subscribers so you can air your voice there :)

[1] Why is this a basic example? Because there is no comparative risk weighing or correlation. A better example would include a risk score attached to an entity like the IP address, the usernames employed (in case of password spraying), the targeted server, etc.

For those of you interested, we started a little discussion thread around this topic on Substack for free subscribers 🥸

This might be the overlooked part of a SOC function. The SOC can be a data source for intel directly attributed to threats that are targeting your organization. If this is on the list of priority intelligence requirements, then a workflow to push at least this tactical intel data is required.

I liked how you mentioned transforming this function into a strategic intel provider. I assume that is more achievable because there would be analytics overlap with what a TI analyst would do?

I am wondering what level of operational maturity would a SOC need to widen the scope of pivoting in the analysis.